What is an AI-Native Product?

Tom Johnson, Former Head of Design at Basedash

· July 14, 2025

We’ve all seen the explosion of AI features slapped onto products over the past year. Little Copilot buttons in the corner. Chat sidebars. Query autocompletion. All branded with that same pitch: “Now with AI.”

But here’s the thing: AI isn’t new anymore. And “Now with AI” doesn’t mean much.

The real shift is deeper. We’re entering a new era where the interface itself is AI. Not just a helper bolted on to the side, but the primary surface through which the product is used.

That shift requires a completely different way of thinking about what a product is. It’s not just about adding AI. It’s about building for AI from the ground up. About letting go of rigid flows and fixed UIs. About treating the model as a first-class operator, not a second-class citizen.

I think there are three kinds of AI products right now:

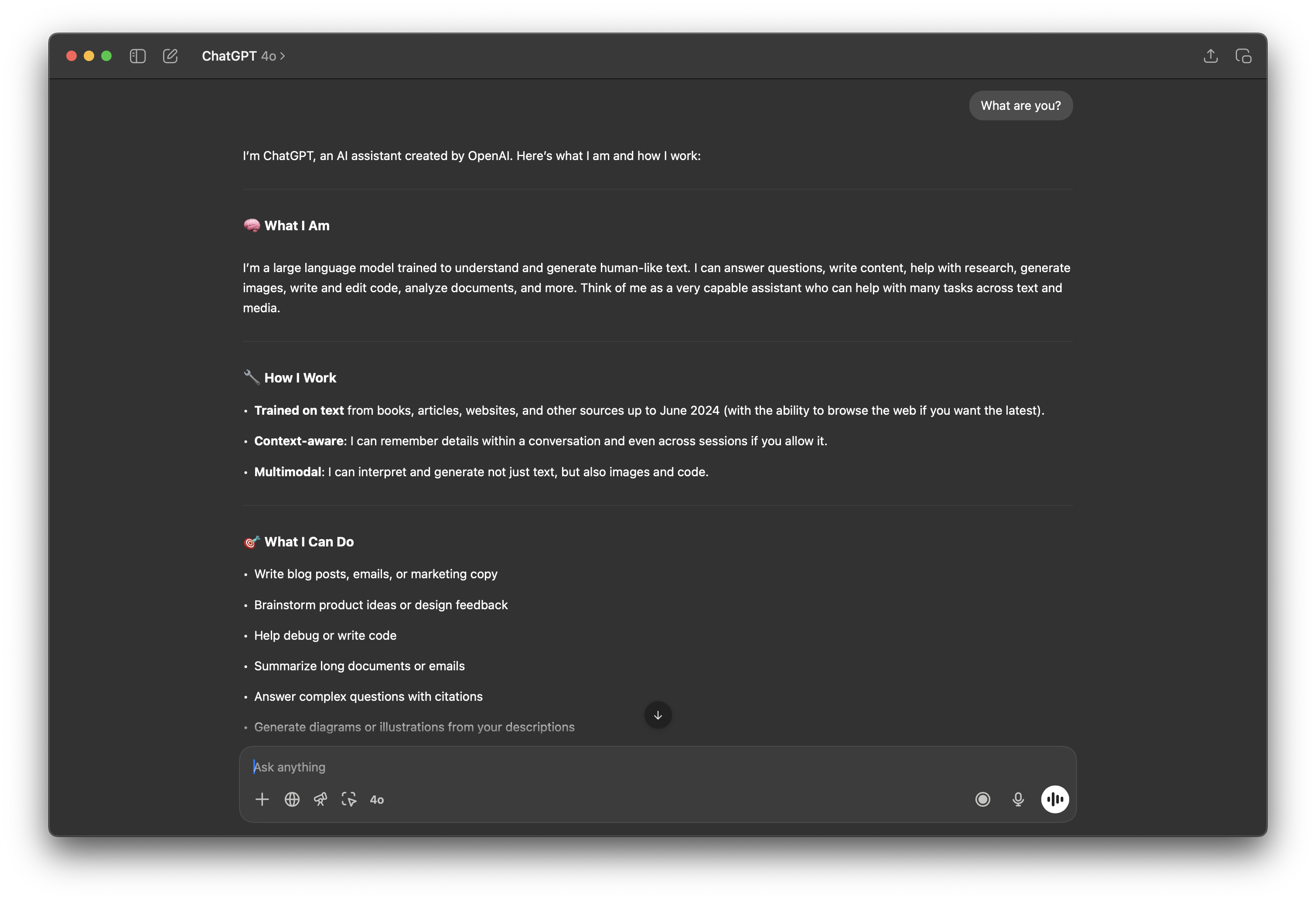

Think: GPT, Claude, Grok, Gemini. You show up, and the product is the intelligence. There’s not much beyond the prompt. The value lives entirely in the model’s capabilities. The UX is driven by how well the assistant can render new things inline in the conversation. In their current state, these are chatbots with threads: you can ask anything, and they leave their functionality open to your prompt.

In the future, I see these functioning more like the operating system than the app that lives on your home screen. Instead of talking to an app, you’ll be talking to a context boundary, something that knows who you are, what data you’ve given it, and how to sort through all of the context. They’re the orchestration layer.

We’re still in the early days of what these mean.

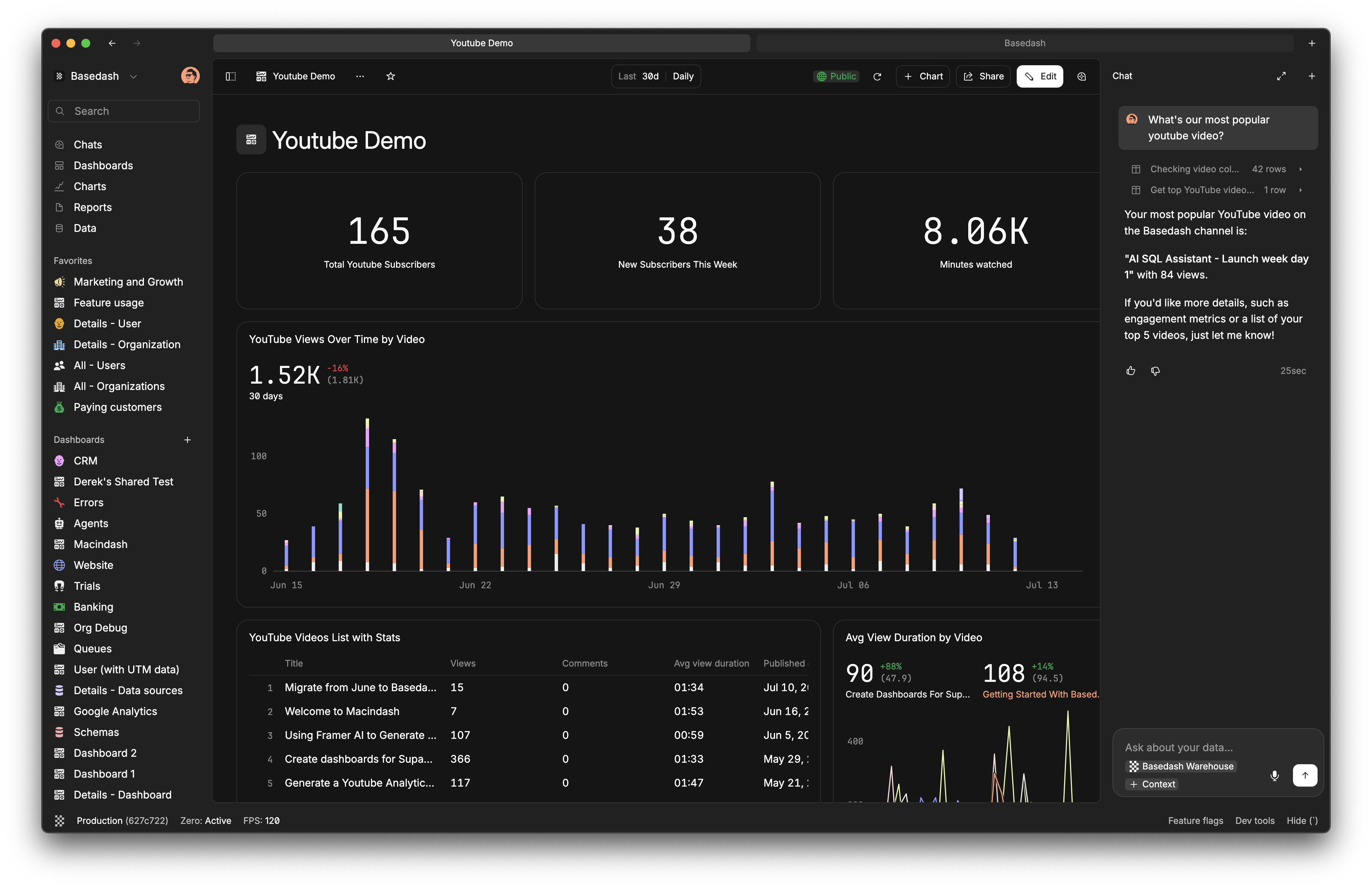

This is where we’re seeing a new category form. Tools like Cursor. Dia. Lovable. Basedash. Perplexity. These are apps where AI isn’t an add-on, it’s the way you use the thing.

Some of these, like Perplexity, put tighter context around the first category. Others reimagine how you interact with existing tools, like Cursor does for VS Code.

Basedash is in this category kinda by accident.

Let me give you a little backstory.

When we were building the new version of Basedash—pivoting from an admin panel into a BI tool—we ran into some issues with how to do more complex controls like filtering, breakdowns, hooking data up to charts, etc.

They were deceptively tricky to design and implement. Not atypical for a complex tool, but user testing wasn’t showing the ease of use we had wanted. It would take time, it would take lots of revisions, and it would require a lot of complexity for us and for the user.

We had a “no code” way to create charts (which most other tools like Mixpanel, Metabase, etc. also have) and a code-based version. I’ll be clear: I was the biggest pusher for the no-code way. I wanted to make sure that our charts weren’t just accessible to technical people. I wanted non-technical users to be able to make their own data visualizations as easily as possible.

I won’t go into the details too far, but because of that we had to support 2 different models of charts.

2 different ways to bind data to them.

2 different version controls.

2 different ways to do everything.

It was a lot to manage from a design perspective, it was confusing for users, and it was a lot to juggle on the architecture side.

But then we had a realization.

We realized that we could just make everything code, because the AI could write all of it. Every chart could be SQL under the hood, but “no code” in the creation experience.

Users could just create everything with AI. Just prompt it.
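Here’s a rough sketch of what “SQL under the hood, no code on the surface” can look like. This is not Basedash’s actual implementation. The `Chart` class, the `draft_chart` function, and the `users` table are all hypothetical names made up for illustration, and the model call is replaced by a hard-coded lookup so the example runs on its own.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class Chart:
    """One chart model: every chart is backed by SQL, however it was created."""
    title: str
    sql: str
    kind: str = "bar"


def draft_chart(prompt: str) -> Chart:
    """Hypothetical stand-in for a model call that turns a prompt into SQL.

    A real app would send the prompt (and the schema) to a model. The canned
    answer below is an assumption that keeps the sketch runnable.
    """
    canned = {
        "signups per plan": (
            "SELECT plan, COUNT(*) AS signups "
            "FROM users GROUP BY plan ORDER BY plan"
        ),
    }
    return Chart(title=prompt, sql=canned[prompt])


def run_chart(conn: sqlite3.Connection, chart: Chart) -> list[tuple]:
    """Execute the chart's SQL and return rows ready for rendering."""
    return conn.execute(chart.sql).fetchall()


# Tiny in-memory database so the example needs no setup.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, plan TEXT)")
conn.executemany(
    "INSERT INTO users (plan) VALUES (?)",
    [("free",), ("pro",), ("free",)],
)

chart = draft_chart("signups per plan")
rows = run_chart(conn, chart)
print(rows)  # [('free', 2), ('pro', 1)]
```

The point of the single `Chart` shape is that there is only one way to bind data and one thing to version. The user only ever sees the prompt, while the stored artifact is always SQL.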

We had already experimented with bolting AI into Basedash Admin, our previous product. It worked, but only in a narrow sense. The app wasn’t designed for it, and it showed. The AI felt boxed in. It couldn’t do enough, and we couldn’t let go of the old scaffolding. There were a lot of interesting tests, but it was held back by the cage that it lived in.

But our new app was a different beast. There were no users. We didn’t have legacy constraints. And once we realized what the AI could do, everything changed. It wasn’t just a feature—it reframed the whole direction of the product.

We had this moment of clarity. If we were serious about making the fastest, easiest BI tool to use, then we needed to lean into AI and build for that.

Not beside it.

Not with it.

For it.

That meant pulling out features we had spent months building, things whose direction I really loved: filters, modals, column pickers, all the traditional BI plumbing. Not because they weren’t useful, but because they were suddenly unnecessary.

Don’t get me wrong, we still have inputs and controls for users, but with an AI-native app, users rarely use them because they don’t need to. We strive to get them 90% of the way there, and if they want to use the controls manually, they can. But it’s not required.

When the AI could be the star of the show, we didn’t need an interface built for technical users. We needed one that could disappear when the user simply asked a question.

That moment changed how we thought about the product. We stopped thinking about how to help analysts build dashboards and charts, and started thinking about how to help anyone ask better questions. How to skip the middleman. How to make data usable for people who would never touch SQL.

And more importantly: how to build something where AI was the app. Not a floating assistant. Not a novelty. But the shell itself.

That’s when it started clicking. When the product started to feel lighter. Faster. More alive.

And that’s just the start. We’re seeing other tools realize the same thing. I wish I could have been a fly on the wall at The Browser Company when they decided to pivot from Arc to Dia, because I’m absolutely sure they had the same realization.

AI-native is a totally different way to build.

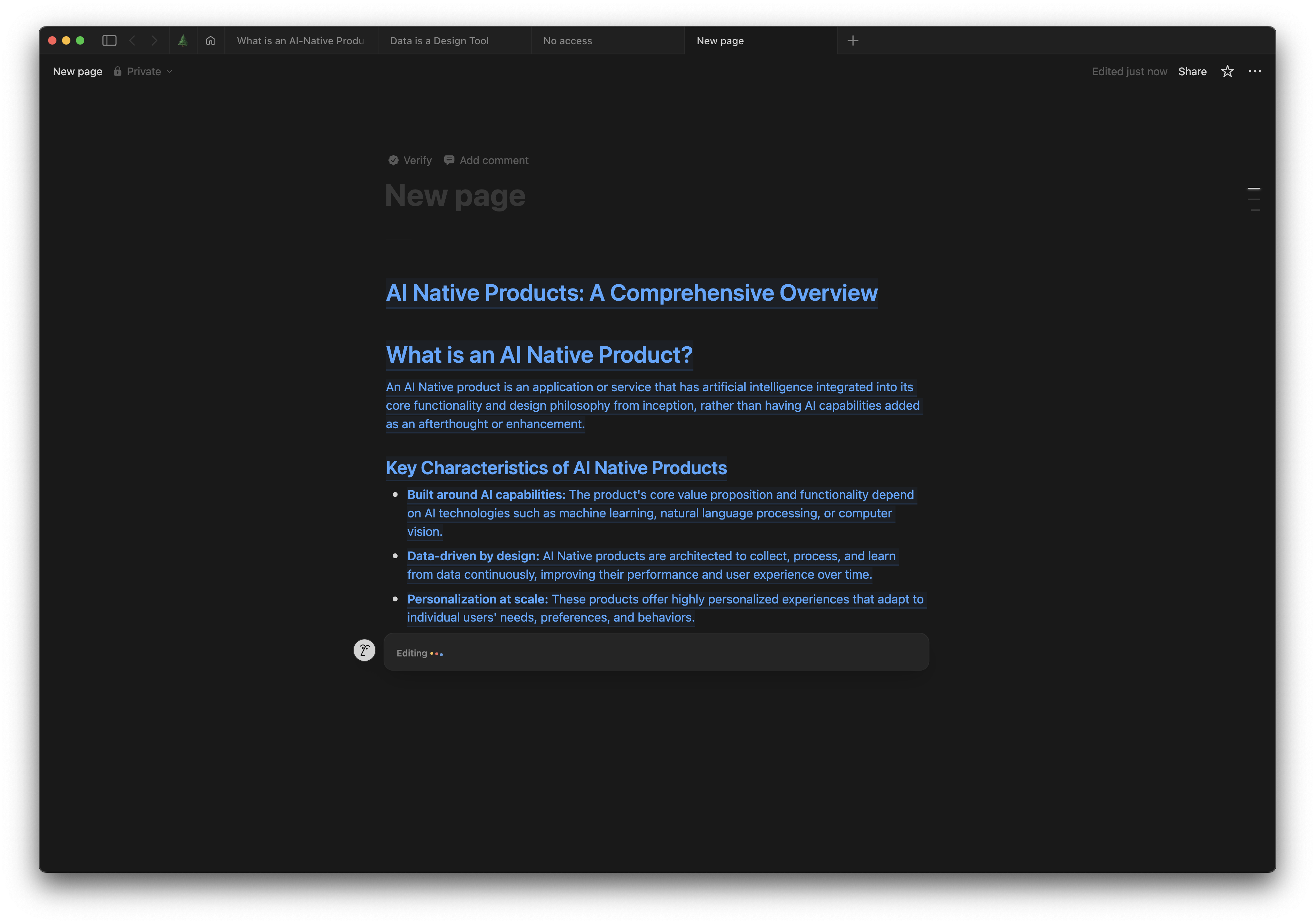

This is the category most apps fall into. They’ve added AI, but it doesn’t change how the product works. It helps. Sometimes. But it’s not foundational. It’s not the primary way you interact. It still requires you to do the heavy lifting.

Notion can summarize. Descript has some really cool AI add-ons. Figma can rename your layers and is adding more AI products. Helpful, promising, and in a lot of cases undeniably good. But a lot of these are still deeply tied to an old interaction model. Depending on the tool, it can range from building entirely new floors on the old house to adding new coats of paint. Sometimes that’s most of what you need, but if the trend continues, they might be replaced by something truly AI-native.

Because the truth is, most products can’t become AI-native. Not without tearing themselves down to the studs.

AI-native isn’t a feature. It’s an architecture.

It means giving the model control. Letting it make decisions. Letting go of fixed navigation. Letting intent drive flow. Loosening what information architecture even means.

It totally unlocks things. Suddenly voice can become a viable primary input. Multimodal makes sense. The app stops being a canvas and starts being a channel. It’s no longer about making features that users will discover, but about discovering latent intent and making sure the AI has the tools to reply effectively.
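To make “letting intent drive flow” a bit more concrete, here’s a minimal sketch of an app with no fixed navigation, where the request picks the code path. Everything in it is an assumption for illustration: the `TOOLS` registry, the `tool` decorator, and the two example tools are hypothetical, and `pick_tool` is a keyword check standing in for the decision a real model would make.

```python
from typing import Callable

# Hypothetical tool registry: each tool is a plain function the model may call.
TOOLS: dict[str, Callable[[str], str]] = {}


def tool(name: str):
    """Register a function as a tool under the given name."""
    def register(fn: Callable[[str], str]) -> Callable[[str], str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("make_chart")
def make_chart(request: str) -> str:
    return f"chart for: {request}"


@tool("answer_question")
def answer_question(request: str) -> str:
    return f"answer to: {request}"


def pick_tool(request: str) -> str:
    """Stand-in for the model's decision.

    A real app would ask a model to choose a tool. This keyword check is an
    assumption that only keeps the sketch runnable.
    """
    return "make_chart" if "chart" in request.lower() else "answer_question"


def handle(request: str) -> str:
    """No menus or fixed screens: the user's intent selects the tool."""
    return TOOLS[pick_tool(request)](request)


print(handle("Chart revenue by month"))    # chart for: Chart revenue by month
print(handle("Which plan grew fastest?"))  # answer to: Which plan grew fastest?
```

In a shape like this, adding a capability means registering another tool rather than designing another screen, which is roughly what giving the AI “the tools to reply effectively” comes down to.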

The days of feature checklists won’t matter as much. What will matter is how deeply the model understands your user’s context. How much of your intent it can act on. How well it can fade into the background until it’s needed—and then show up exactly where you are.

It changes what a “user” even is. The person asking the question becomes the person using the product. Not the analyst writing the query. Not the engineer wiring the dashboard. Just the person with the question. Direct to the source.

That shift, from serving the builder to serving the asker, is massive. It deserves its own post. I’ll write about that later.

Because the next generation of tools isn’t really about better dashboards or prettier interfaces. It’s about collapsing the distance between thought and action. About building tools that don’t need to be taught. That don’t have submenus, power-user features, and UI complexity.

That just do what they are TOLD to do.

We’re not there yet. AI is still not great in a lot of ways: slow, limited, overburdened by context limits, expensive, and open-ended. And it asks for a shift from telling users “here’s what you can do and how to use our app” to asking them “what do you want the app to do for you and how are you going to ask it?”

The models will continue to improve. New modalities and input methods will start to emerge. User expectations will grow faster than Moore’s law. We’re already seeing it. This is just the beginning.

The apps that embrace this, that rebuild themselves around AI instead of adding it in after the fact, will feel different.

They’ll feel like magic.

And they’ll win.

Tom Johnson is a product designer and former head of design at Basedash, where he helped shape the product experience, interface systems, and design direction of the platform. His work focuses on design systems, interaction design, and translating complex technical workflows into products that feel clear, fast, and intuitive.
