Airflow MySQL Operator Guide

November 10, 2023

The Airflow MySQL Operator runs SQL statements against a MySQL database as a task inside an Apache Airflow DAG (Directed Acyclic Graph). Database operations are then scheduled, retried, and monitored by Airflow alongside the rest of the workflow.

Understanding the MySQL Operator

Overview

At its core, the MySQL Operator enables the execution of SQL queries in a MySQL database. It is particularly useful for tasks like data extraction, transformation, and loading (ETL), as well as database maintenance and analytics.

Key Features

- SQL Execution: Execute any MySQL query.

- Parameterization: Supports parameterized queries, which help prevent SQL injection.

- Flexibility: Can be used in various stages of a data pipeline.

Implementing the MySQL Operator in Airflow

Preparing the MySQL Hook

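Before using the MySQL Operator, define a connection to your MySQL database in Airflow's UI under Admin -> Connections. The operator looks up that connection by its ID and creates a MySQL Hook internally. You only need to instantiate a hook yourself when you want to query the database directly from Python code: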

    from airflow.providers.mysql.hooks.mysql import MySqlHook

    mysql_hook = MySqlHook(mysql_conn_id='your_connection_id')
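As a rough sketch of what a hook is for, the helper below reads rows through a hook object. It assumes the hook provides get_records(sql, parameters=...), which MySqlHook inherits from Airflow's generic DB-API hook. The users table, its columns, and the function name fetch_active_users are hypothetical.

```python
def fetch_active_users(hook):
    """Return (id, email) rows for active users via a DB-API style hook.

    `hook` is expected to behave like MySqlHook, i.e. to provide
    get_records(sql, parameters=...). Table and column names are hypothetical.
    """
    sql = "SELECT id, email FROM users WHERE status = %s"
    # Values go in `parameters`, never into the SQL string itself.
    return hook.get_records(sql, parameters=("active",))
```

In a DAG file this would typically be called from a PythonOperator callable, passing in MySqlHook(mysql_conn_id='your_connection_id').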

Using the MySQL Operator

Basic Usage

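To use the MySQL Operator, first import it, then define the task in your DAG. Here's a simple example that executes a SQL query. Note that recent releases of the MySQL provider deprecate MySqlOperator in favor of SQLExecuteQueryOperator from the common SQL provider, so check which one your installed version supports.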

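    from datetime import datetime

    from airflow import DAG
    from airflow.providers.mysql.operators.mysql import MySqlOperator

    # Placeholder DAG so the task has something to attach to; adjust to your setup
    dag = DAG(dag_id='mysql_example_dag', start_date=datetime(2023, 1, 1))

    # Define the task
    mysql_task = MySqlOperator(
        task_id='mysql_example',
        mysql_conn_id='your_connection_id',
        sql='SELECT * FROM your_table;',
        dag=dag,
    )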

Parameterized Query Example

Parameterized queries pass values separately from the SQL text, so the driver escapes them and user-supplied input cannot change the structure of the statement. Here's how to implement them:

    sql_query = """
    SELECT * FROM users WHERE status = %s;
    """
    params = ('active',)

    mysql_task = MySqlOperator(
        task_id='mysql_parameterized_query',
        mysql_conn_id='your_connection_id',
        sql=sql_query,
        parameters=params,
        dag=dag,
    )
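To see why binding matters, the sketch below uses Python's built-in sqlite3 module as a stand-in database, purely so it runs without a MySQL server. That substitution is an assumption made for illustration: sqlite3 uses ? as its placeholder where MySQL drivers use %s, and the users table and its rows are made up.

```python
import sqlite3

# In-memory stand-in database with two hypothetical users.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, status TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("ana", "active"), ("bo", "inactive")],
)

# Input crafted to change the meaning of the WHERE clause.
malicious = "active' OR '1'='1"

# Unsafe: the value is pasted into the SQL text, so the OR clause takes effect.
unsafe_sql = f"SELECT name FROM users WHERE status = '{malicious}'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Safe: the value is bound as a parameter and compared as a plain string.
safe_rows = conn.execute(
    "SELECT name FROM users WHERE status = ?", (malicious,)
).fetchall()

print(len(unsafe_rows), len(safe_rows))
```

The interpolated query matches every row, while the parameterized one matches none, because no user has that literal status.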

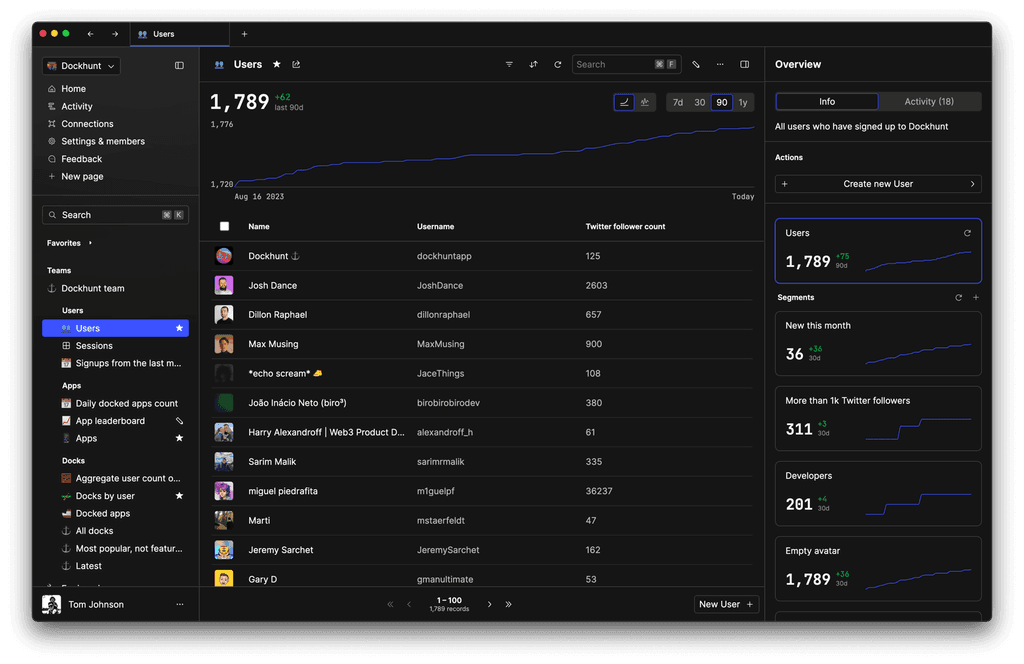

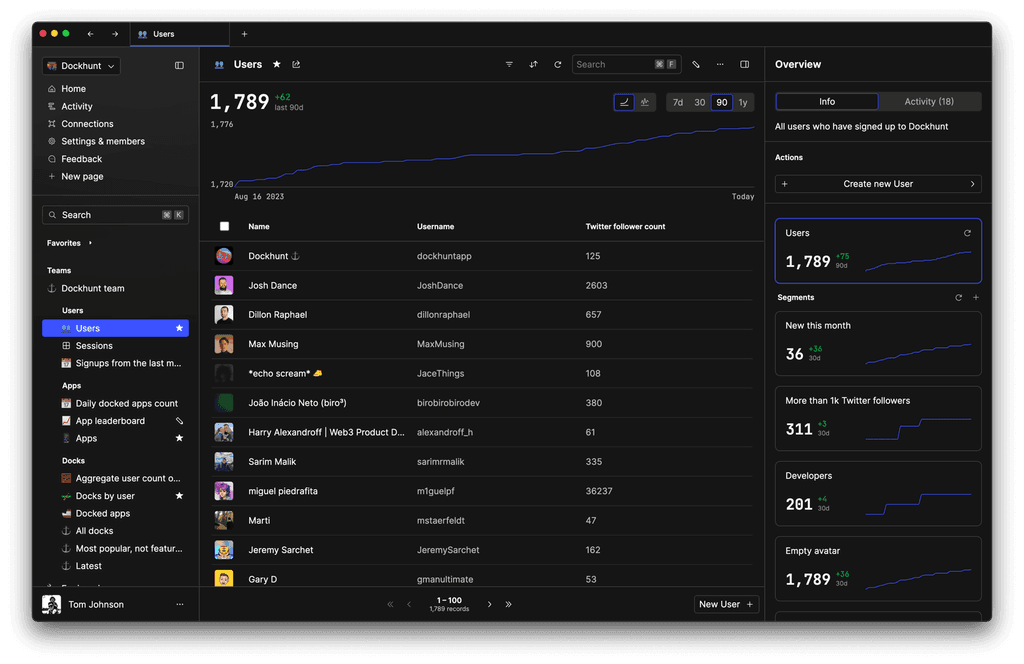

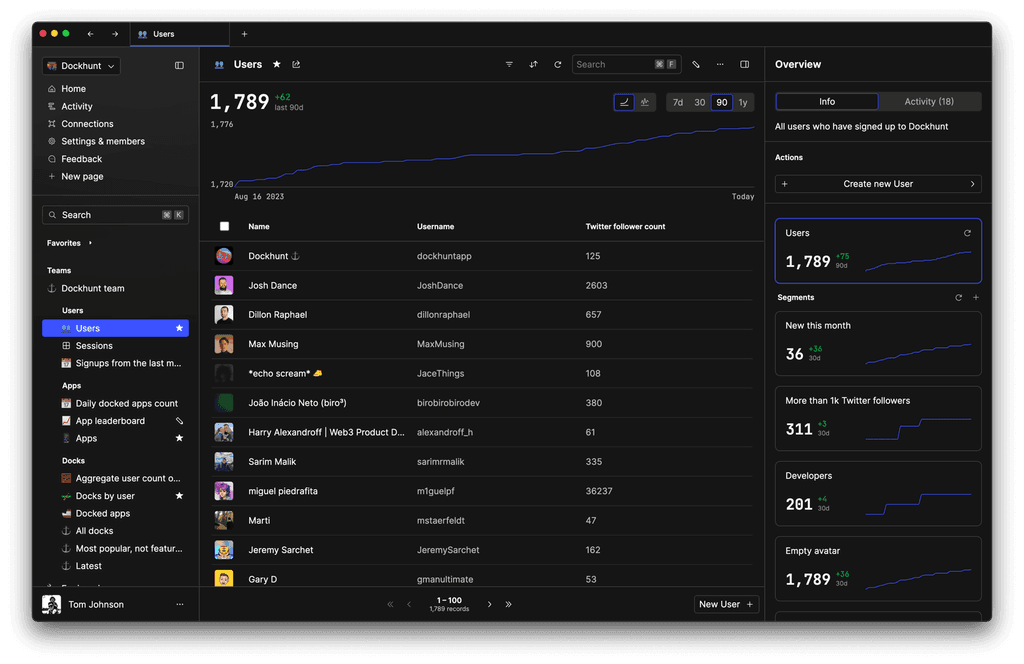

Integrating MySQL Operator in Complex Workflows

Combining with Other Operators

The MySQL Operator can be combined with other operators like PythonOperator or BashOperator for complex workflows. For instance, you might use a PythonOperator to process data before loading it into MySQL.
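One way to picture the PythonOperator step is a callable that cleans rows and then loads them through a hook. The sketch below assumes the hook provides insert_rows(table, rows, target_fields), as Airflow's DB-API hooks (including MySqlHook) do. The function names, the users table, and its email and status columns are hypothetical.

```python
def normalize_users(raw_rows):
    """Trim and lowercase e-mail addresses, dropping rows without one."""
    cleaned = []
    for email, status in raw_rows:
        email = (email or "").strip().lower()
        if email:
            cleaned.append((email, status))
    return cleaned


def load_users(raw_rows, hook, table="users"):
    """Clean the rows, then bulk-insert them through the hook."""
    rows = normalize_users(raw_rows)
    # insert_rows is provided by Airflow's DB-API hooks, including MySqlHook.
    hook.insert_rows(table=table, rows=rows, target_fields=["email", "status"])
    return len(rows)
```

Inside a PythonOperator callable you would create the MySqlHook, obtain the raw rows from the upstream step, and pass both to load_users.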

Handling Dependencies

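Ensure that your tasks have the correct dependencies. Use the set_upstream or set_downstream methods, or the bitshift operators (>>, <<), to define task order. For example, extract >> transform >> load runs the three tasks from left to right, and load.set_upstream(transform) expresses the same relationship between the last two.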

Error Handling and Best Practices

Exception Handling

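Plan for task failures rather than assuming every query succeeds. Configure retries and a retry delay on the task, and attach an on_failure_callback for alerting, so that a failed query is retried where appropriate and does not go unnoticed.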
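A minimal sketch of that setup follows. The retries, retry_delay, and on_failure_callback keys are standard Airflow task arguments, and Airflow passes the task context to the callback. The specific values, the function names, and the message format are assumptions for illustration.

```python
import logging
from datetime import timedelta

log = logging.getLogger(__name__)


def describe_failure(context):
    """Build a short message from the context Airflow passes to callbacks."""
    ti = context["task_instance"]
    return f"Task {ti.task_id} in DAG {ti.dag_id} failed: {context.get('exception')}"


def notify_failure(context):
    """Failure callback; replace the log call with your alerting of choice."""
    log.error(describe_failure(context))


# Pass as default_args to the DAG, or set the same keys on a single task.
default_args = {
    "retries": 2,                         # retry twice before marking failed
    "retry_delay": timedelta(minutes=5),  # wait between attempts
    "on_failure_callback": notify_failure,
}
```

Retries suit transient problems such as dropped connections. A query that fails deterministically will fail on every attempt, so the callback is what surfaces it.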

Logging

Use Airflow's task logs, which are available per task run in the Airflow UI, to keep track of task execution and diagnose issues.
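In your own Python callables, the standard logging module is usually enough. The sketch below assumes Airflow's default logging configuration, under which records from standard loggers end up in the task log. The function name and messages are hypothetical.

```python
import logging

# A module-level logger; under Airflow's default setup its records
# are expected to appear in the log of the task that runs this code.
log = logging.getLogger(__name__)


def summarize_rows(rows, table):
    """Log how many rows a query returned and hand back the count."""
    count = len(rows)
    if count == 0:
        log.warning("No rows returned from %s", table)
    else:
        log.info("Fetched %d rows from %s", count, table)
    return count
```

Logging row counts at each step makes it easier to spot where a pipeline started producing empty or unexpectedly large results.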

Resource Management

Be mindful of the resources your queries consume. Select only the columns you need, filter on indexed columns where possible, and process large tables in batches rather than in a single statement.
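Batching can be sketched with a small generator that splits any iterable of rows into fixed-size chunks. The helper name batched and the batch size are assumptions, not part of Airflow.

```python
from itertools import islice


def batched(rows, size):
    """Yield lists of at most `size` rows from any iterable."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(rows)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk
```

Each chunk can then be written or processed on its own, which keeps memory use and transaction size bounded.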

Conclusion

The MySQL Operator gives Apache Airflow a direct way to run MySQL operations as part of a data workflow. Because it executes arbitrary SQL and composes with other Airflow operators, it fits extraction, loading, and maintenance steps alike.

Remember, this guide is a starting point. Explore further customization and optimization based on your specific workflow needs.

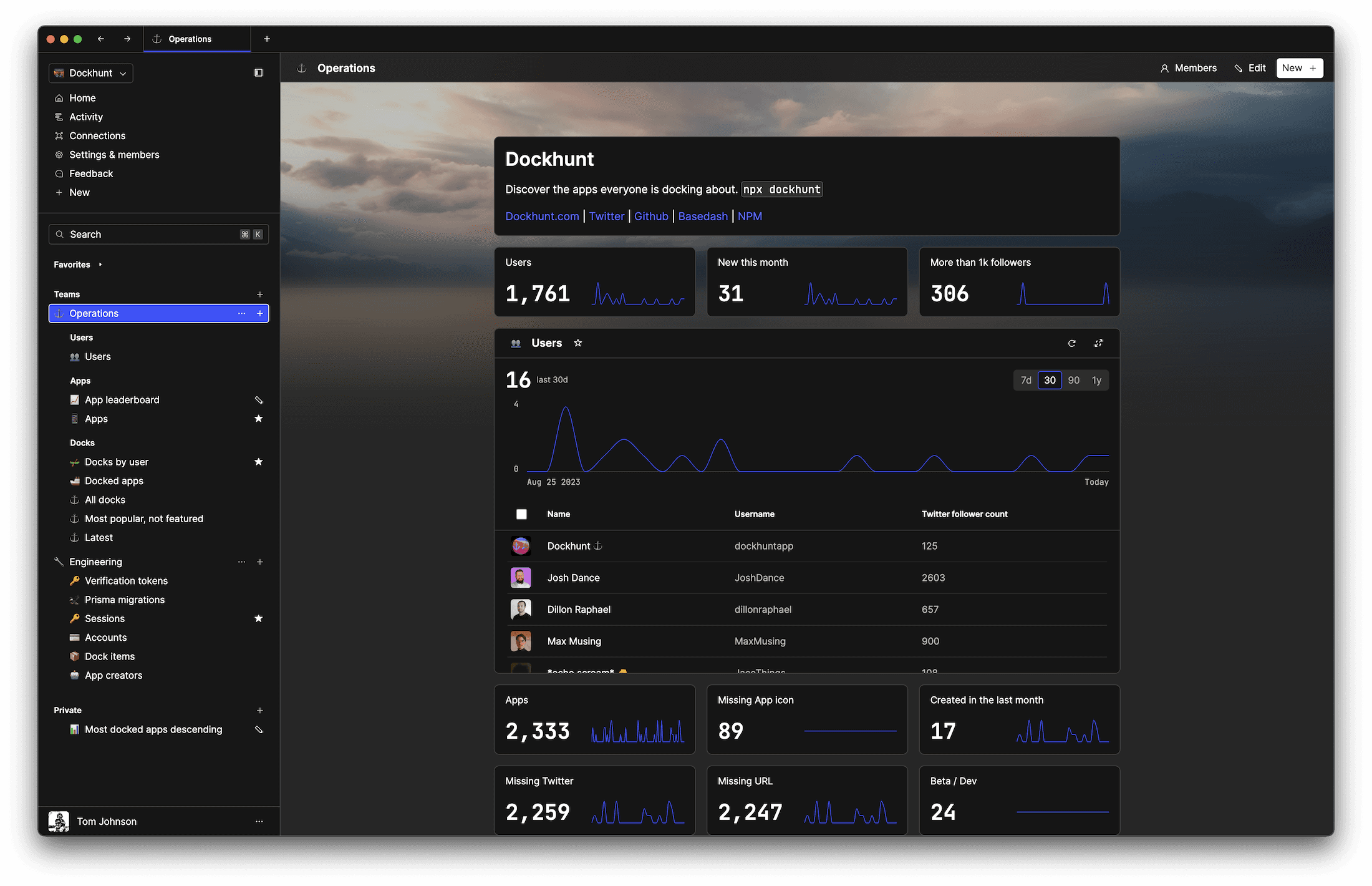

What is Basedash?

What is Basedash?

What is Basedash?

Basedash is the best MySQL admin panel

Basedash is the best MySQL admin panel

Basedash is the best MySQL admin panel

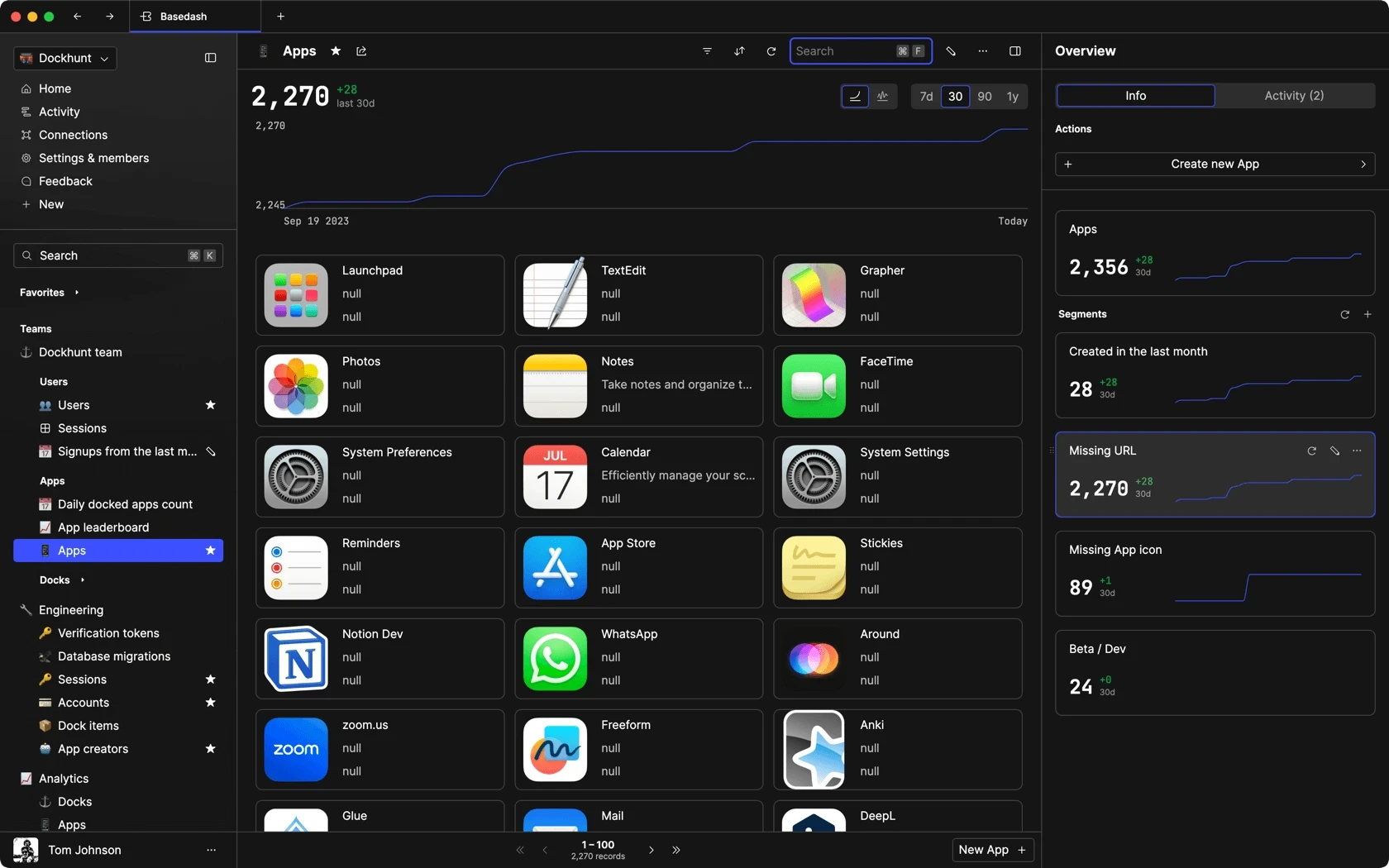

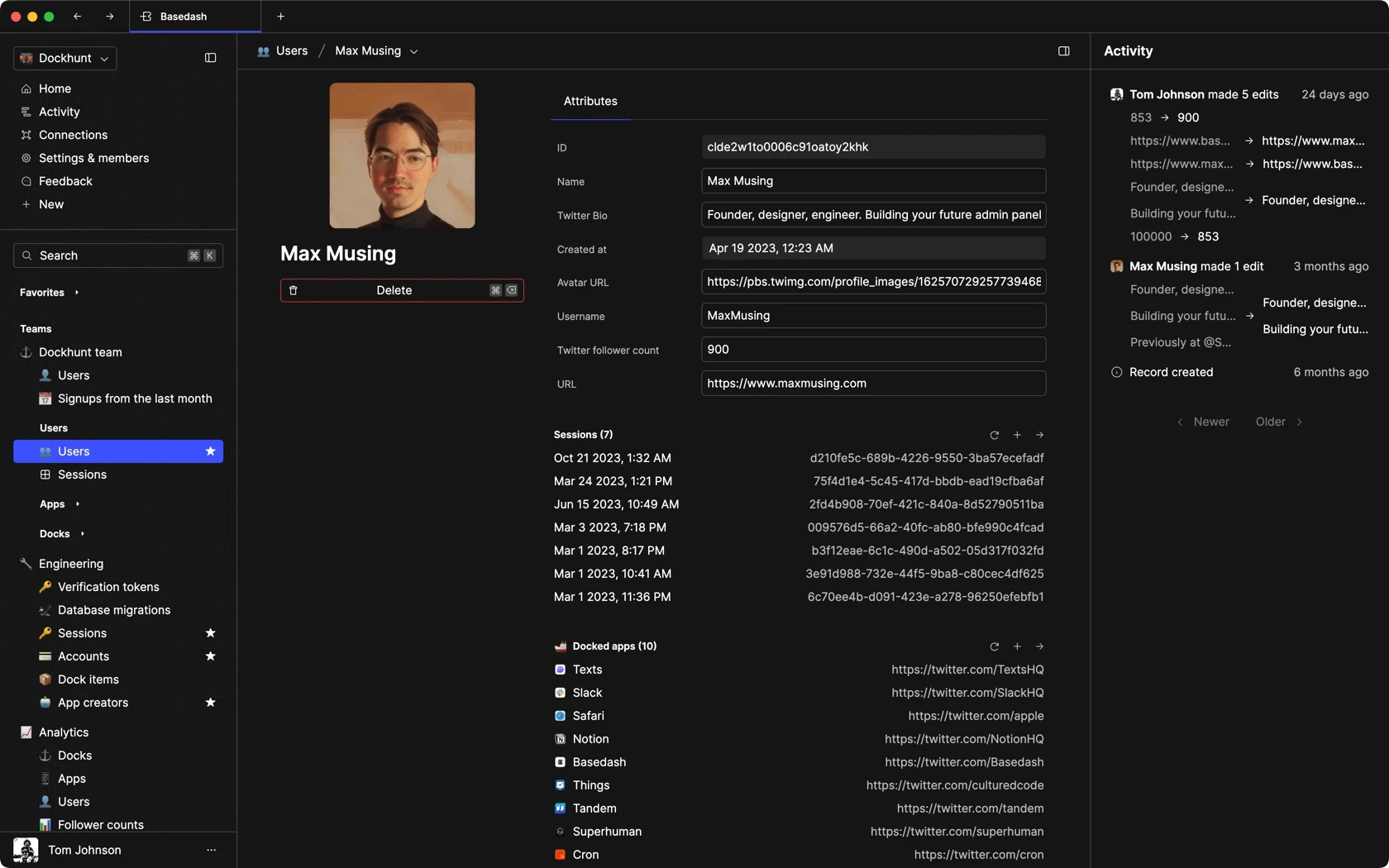

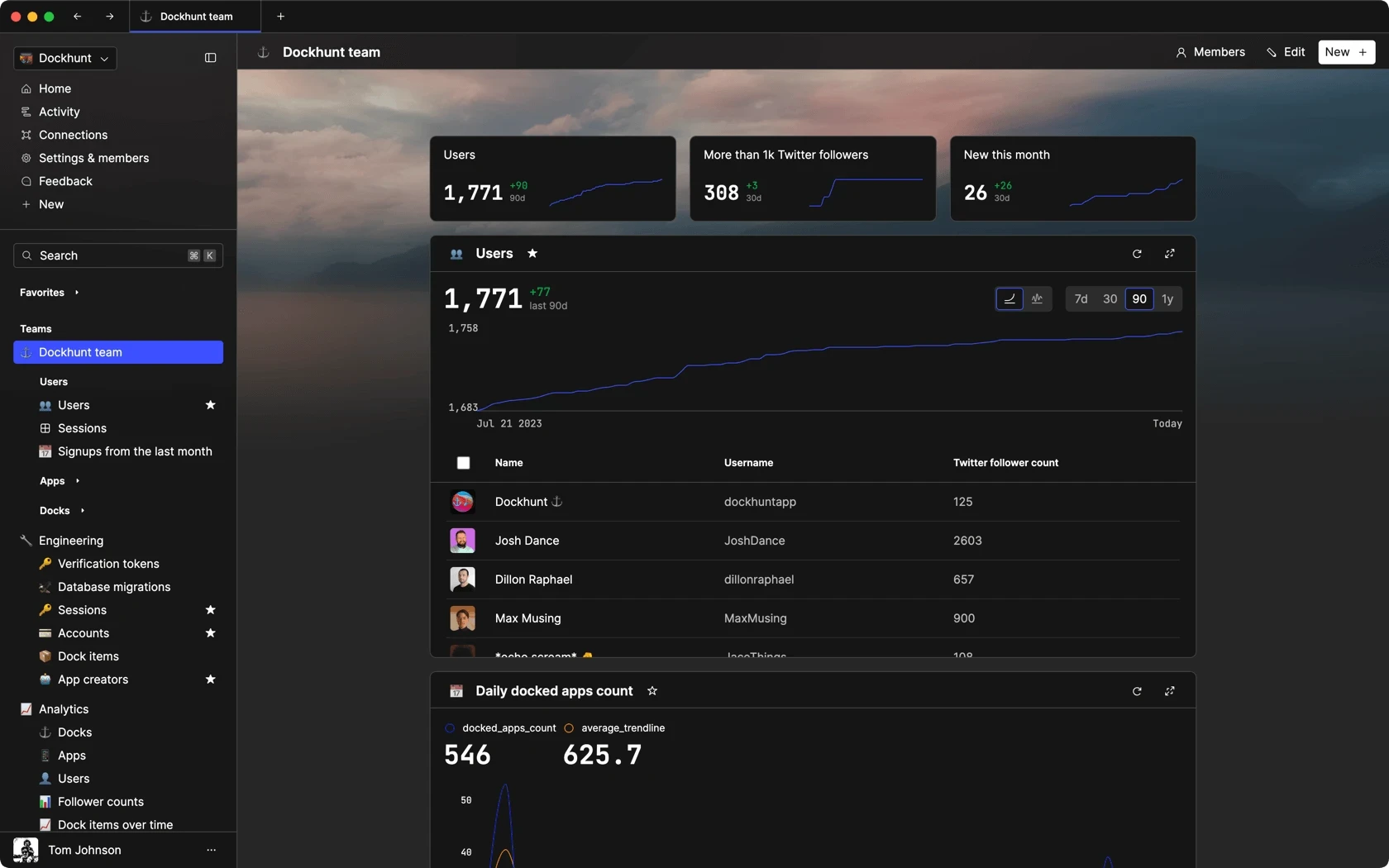

If you're building with MySQL, you need Basedash. It gives you an instantly generated admin panel to understand, query, build dashboards, edit, and share access to your data.

If you're building with MySQL, you need Basedash. It gives you an instantly generated admin panel to understand, query, build dashboards, edit, and share access to your data.

If you're building with MySQL, you need Basedash. It gives you an instantly generated admin panel to understand, query, build dashboards, edit, and share access to your data.

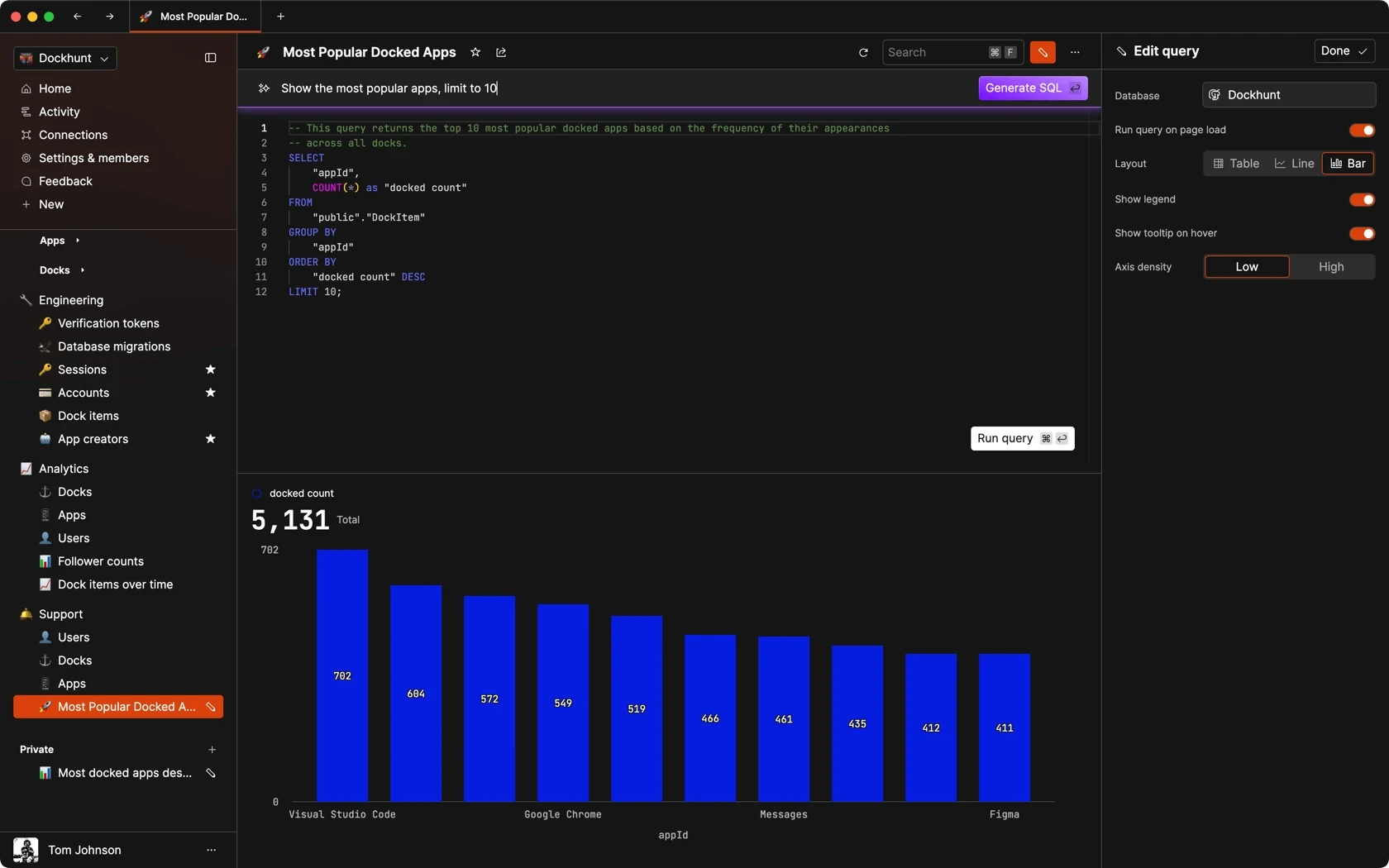

Dashboards and charts

Edit data, create records, oversee how your product is running without the need to build or manage custom software.

USER CRM

ADMIN PANEL

SQL COMPOSER WITH AI

Related posts

Related posts

Related posts

How to Add Columns to MySQL Tables with ALTER TABLE

Robert Cooper

How to Add Columns to Your MySQL Table

Max Musing

Pivot Tables in MySQL

Robert Cooper

How to Rename a Table in MySQL

Max Musing

How to Optimize MySQL Tables for Better Performance

Robert Cooper

How to Display MySQL Table Schema: A Guide

Jeremy Sarchet